The Social Imaginaries of Buffered Media

Kevin Hamilton / University of Illinois

In a recent piece for the Design Observer, Shannon Mattern surveyed how scholars, artists and activists are working today to render visible a variety of ubiquitous but invisible infrastructures. Citing among others Flow contributor Lisa Parks, Mattern connects active, involved citizenship to not only reading about the physical structures that support modern life, but sensing them through sight, hearing, touch, even taste.

For consumers of streaming media, it is often easier to sense these structures than to make sense of them. Through progress bars, lags, skips and sync problems, streaming media consumers constantly experience the physicality of networks, as files load into and out of memory at each stage of transfer from host to client, or as packets drop at congested points in the flow. What exactly are we listening to in these moments? What are we seeing when the file is loading?

Scholars of technology have discussed how such breakdowns manifest a kind of revelation for citizens, a necessarily rare occurrence for technologies that depend on ubiquity, and therefore invisibility, for their power. But in the case of “broken” media streams, there’s no small amount of debate about what these moments reveal. Even a slightly closer look at the increasingly common phenomenon of media buffering reveals some useful clarifications about the nature of the gaps in the network, the changing stakes of temporality, and the persistent role of geographical proximity in media distribution.

When explaining the hiccups in digital streams, many of us go to analogies from automotive traffic, imagining a crowded freeway. Such congestion metaphors are not always apt, however, and in many ways conflate two distinct properties of internet connections: throughput and latency. An abundance of technical articles (and long argumentative comment threads) exists to parse these out in detail, but for now let’s just take as a given that there are two influential factors in how smoothly your media flow.

Throughput is where the freeway analogy makes the most sense. One can measure the throughput of an internet connection in terms of how much data per second can actually move through a “pipe.” Throughput increases as the size or quantity of pipes increases. Just as squeezing a large object through a small hole will take some time, loading a large media file through a low-throughput medium or connection will result in buffering.

The latency of an internet connection refers not to the quantity of information a particular “pipe” can accommodate, but to the time it takes for a packet of information to travel through said pipe. This is where the freeway analogy doesn’t quite hold. The rate at which information flows through a single fiber-optic connection, for example, doesn’t really vary – it travels at close to the speed of light.
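To make the distinction concrete, here is a minimal sketch in Python. It assumes a deliberately simple model in which the time to receive something is the latency plus the size divided by the throughput. The function name and every number in it are illustrative assumptions, not measurements of any real connection.

```python
def transfer_time_seconds(size_bits, throughput_bps, latency_s):
    """Rough delivery time: travel delay plus the time to push the bits through."""
    return latency_s + size_bits / throughput_bps


VIDEO_CHUNK_BITS = 8_000_000  # about one megabyte of video (assumed)
CHAT_MESSAGE_BITS = 8_000     # about one kilobyte of chat or game data (assumed)

for label, size in [("video chunk", VIDEO_CHUNK_BITS), ("chat message", CHAT_MESSAGE_BITS)]:
    # A wide pipe to a distant host: 100 Mbit/s throughput, 200 ms latency.
    wide_but_far = transfer_time_seconds(size, 100_000_000, 0.200)
    # A narrow pipe to a nearby host: 10 Mbit/s throughput, 20 ms latency.
    narrow_but_near = transfer_time_seconds(size, 10_000_000, 0.020)
    print(f"{label}: wide/far {wide_but_far:.4f}s, narrow/near {narrow_but_near:.4f}s")
```

Under these assumed numbers, the large file arrives sooner over the wide but distant connection, while the small message arrives sooner over the narrow but nearby one. The same "slowness" can have two quite different causes.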

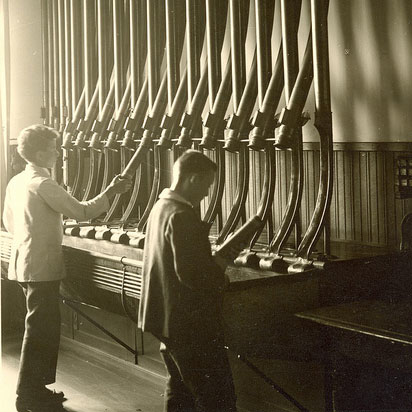

A better metaphor would be an old pneumatic tube transport system. Imagine a single large building with two autonomous mail tube systems, connected by a room where human workers transferred messages from one system to the other.

You could define the throughput of the system from the perspective of a single sender in terms of the number of tubes that reach her office. Adding a second tube to an office that has only one, for example, could double the number of messages sent in the same amount of time. The latency of the system from the perspective of that same sender could be defined in terms of the speed of the vacuum that pulls capsules through the building, or in terms of the length of the total journey a message has to undertake. Messages sent across a short distance would have lower latency than those sent over a long distance, and increasing the power of the vacuum in the system would decrease latency overall.

Where latency and throughput grow more interdependent is in the room where the building’s two systems meet. As message traffic approaches the room’s capacity, the volume of items to transfer grows, and a queue might stack up – introducing new latency to the transaction, a delay that will only grow with the addition of new tubes, unless you also add new workers.
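The transfer room can be sketched as a very small simulation. This is a toy model under stated assumptions: time moves in uniform ticks, a fixed number of messages arrives each tick, and each worker moves exactly one message per tick. The function and parameter names are hypothetical.

```python
def backlog_over_time(arrivals_per_tick, workers, ticks):
    """Return the number of messages left waiting in the room after each tick."""
    backlog = 0
    history = []
    for _ in range(ticks):
        backlog += arrivals_per_tick      # new capsules drop out of the tubes
        backlog -= min(backlog, workers)  # each worker transfers one capsule
        history.append(backlog)
    return history


print(backlog_over_time(arrivals_per_tick=3, workers=4, ticks=5))  # room keeps up
print(backlog_over_time(arrivals_per_tick=6, workers=4, ticks=5))  # more tubes, same staff
print(backlog_over_time(arrivals_per_tick=6, workers=6, ticks=5))  # more tubes, more staff
```

In the second case the pile grows by two messages every tick, so each new arrival waits longer than the last, even though nothing about the tubes themselves has slowed down.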

Now imagine this pneumatic tube system proliferating until you have not only scores of such autonomous systems, but scores of rooms designed for transfer from one system to the other. That’s pretty much the internet – a world always demanding new tubes, and new connections between systems, thus offering abundant opportunities for latency as well as throughput limitations.

This separation of “congestion” into latency and throughput is important for reasons of policy. To continue the pneumatic metaphor, a company might argue that the system pushes everyone’s messages at the same speed, but perhaps workers at the “transfer station” are flagging certain messages to jump the queue at busy moments. (Perhaps a collective might even argue for such prioritization, in the interest of particular groups or purposes.)

From the perspective of media studies, the parsing of latency and throughput is also important for how it helps identify some different sites of contention in arguments over a shared, largely invisible resource. As consumers turn their attention to different sites within this network, we might expect to see different “social imaginaries” emerge in response to the lags or breaks.

When faced with “laggy” gaming, awkward turn-taking in voice chat, or badly buffered video, where does our frustration turn, and where do we potentially seek action? Many turn to throughput problems and curse the service provider, conducting speed tests to prove bias against particular platforms or even geographies. Others assume steady throughput, suspect a latency problem at the source or destination, and either switch platforms or upgrade their devices. Still others may turn their frustration to their network neighbors, complaining of bandwidth hogs. All such perspectives and more might have good technical bases, but might also be wholly unfounded. Just as radio once conjured particular imaginaries of co-listeners and the spaces they shared, our new streaming media lend themselves to all manner of myth-making that can influence use and even identity-construction.

By most technical accounts (and even these may still be wrong), the recent agreement between Comcast and Netflix seems to have addressed a throughput problem through an effort at latency reduction. Where many net neutrality advocates worried that Netflix was paying Comcast to give them faster throughput, the agreement is more oriented around removing a middle agent that was introducing latency into the system. Cogent, the company that transported Netflix data to Comcast for delivery to consumers, was not keeping up with demand – not enough staff in the tube transfer room, so to speak – so Netflix worked out a deal to tie their system more integrally to Comcast’s.

Advocates of net neutrality worried that this might be the first in a series of new arrangements, including those hinted at by the past week’s rumored FCC policy changes, where a provider pays for faster throughput on the same network that “throttles” or “caps” others. Images of paid highway “fast lanes” come to mind, and that could very well happen, through granting of fatter “tubes” to certain providers.

What we will likely see instead, in the Comcast-Netflix arrangement at least, is prioritization of some material over others at the packet level within transfer queues. The better analogy here than the interstate fast lane would be our pneumatic tube transfer station, in which certain messages get yanked from the back of the queue and given new priority for passage to the next juncture. Comcast customers using Netflix won’t likely get “faster pipes,” but rather lower latency – possibly at the expense of others, though this remains to be seen.
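Queue-jumping of this kind can be illustrated with a priority queue. The sketch below is only an analogy in code, built on Python's standard `heapq` module. It makes no claim about how Comcast, Netflix, or any real router schedules packets, and the message labels are invented for illustration.

```python
import heapq


def transfer_order(messages):
    """messages: list of (label, flagged) pairs in arrival order.

    Returns the labels in the order they leave the transfer room:
    flagged messages first, then everyone else, each group first come, first served.
    """
    heap = []
    for arrival, (label, flagged) in enumerate(messages):
        rank = 0 if flagged else 1  # lower rank leaves sooner
        heapq.heappush(heap, (rank, arrival, label))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


queue = [("email", False), ("game update", False), ("paid video", True)]
print(transfer_order(queue))
```

The flagged message arrived last but leaves first. No tube got any wider; the others simply wait a little longer.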

An interesting thing about latency is that unlike throughput, it has a hard limit determined by the transfer medium – a signal over fiber-optic can’t travel faster than light. Badly buffered media can speak of these limits, or of the effects of transferring signals between different kinds of media – such as the move from fiber-optic media to coaxial or wireless. Though latency at such levels might be imperceptible to human eyes and ears at first, a few small delays can add up in applications that depend on synchronization – in online gaming, or video chat, for example, but also in remote medical monitoring, or financial trading.
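That hard limit is easy to estimate. The sketch below assumes that light in optical fiber travels at roughly two-thirds of its speed in a vacuum, a common rule of thumb rather than a property of any particular cable, and it ignores every delay added by routers, queues and changes of medium. The distances are illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # in a vacuum
FIBER_FRACTION = 2 / 3                # assumed rule of thumb for glass fiber


def min_one_way_delay_ms(distance_km, fraction_of_c=FIBER_FRACTION):
    """Lower bound on one-way travel time over fiber, in milliseconds."""
    speed_m_per_s = SPEED_OF_LIGHT_M_PER_S * fraction_of_c
    return distance_km * 1000 / speed_m_per_s * 1000


for distance_km in (40, 4000):
    print(f"{distance_km} km: at least {min_one_way_delay_ms(distance_km):.2f} ms one way")
```

Under these assumptions a signal crossing 4,000 kilometers needs about 20 milliseconds each way before any equipment has touched it, while one crossing 40 kilometers needs about a fifth of a millisecond. No upgrade to the pipe changes that; only moving closer does.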

For these reasons, a growing number of providers are moving to reduce the physical distance between their material and the Internet Service Providers that deliver it to consumers. Through “co-locating” or “peering” with Internet Service Providers – as Netflix did with Comcast – content providers literally shorten the connection between themselves and the internet, in order to reduce the latency that results from both physical and informational processes. Through their recent agreement, Netflix physically moved in with Comcast.

In a particularly concrete physical manifestation of this phenomenon, high-frequency stock trading increasingly relies on facilities where each bank’s computer sits under the same roof to share a common connection to the internet. The attraction of common geo-location for algorithmic traders is a leveling of latency, accomplished through the enactment of identical physical infrastructure, down even to cable length.

That geographic proximity could still play such a prominent role in media distribution goes against some common tropes of modernization. That an emerging answer to service interruption is a blurring of infrastructural distinction between content providers and delivery networks gives us much to ponder. Next time you’re faced with the familiar animations signaling a buffering video file, try noticing where your imagination (or your frustration) lands, and consider what world might emerge from that explanation, if shared by a multitude of people like you.

Thanks to my colleagues Karrie Karahalios and Christian Sandvig at the Center for People and Infrastructures for helping me sort out some of these details.

Image Credits:

1. Buffering symbol

2. Traffic jam

3. Pneumatic tubes

4. Comcast and Netflix agreement

Bibliography:

Bright, Peter. “Video Buffering or Slow Downloads? Blame the Speed of Light.” Ars Technica. 18 Mar. 2013. http://arstechnica.com/information-technology/2013/03/youtube-buffering-or-slow-downloads-blame-the-speed-of-light/.

Grigorik, Ilya. “Latency: The New Web Performance Bottleneck – Igvita.com.” 19 Jul. 2012. http://www.igvita.com/2012/07/19/latency-the-new-web-performance-bottleneck/.

Mattern, Shannon. “Infrastructural Tourism: From the Interstate to the Internet: Places: Design Observer.” 1 Jul. 2014. http://places.designobserver.com/feature/infrastructural-tourism/37939/.

Rayburn, Dan. “Here’s How The Comcast & Netflix Deal Is Structured, With Data & Numbers – Dan Rayburn – StreamingMediaBlog.com.” 27 Feb. 2014. http://blog.streamingmedia.com/2014/02/heres-comcast-netflix-deal-structured-numbers.html.

Roettgers, Janko. “Comcast Customers Get Much Faster Netflix Streams, Thanks to Peering Deal — Tech News and Analysis.” 14 Apr. 2014. http://gigaom.com/2014/04/14/comcast-faster-netflix/.

Schneider, David. “The Microsecond Market – IEEE Spectrum.” 30 Apr. 2012. http://spectrum.ieee.org/computing/networks/the-microsecond-market/.

Sconce, Jeffrey. Haunted Media: Electronic Presence from Telegraphy to Television. Duke University Press, 2000.

“SETTLEMENT-FREE INTERCONNECTION (SFI) POLICY FOR COMCAST AS7922.” Oct. 2013. http://www.comcast.com/peering.

Star, Susan Leigh. “The Ethnography of Infrastructure.” American Behavioral Scientist 43.3 (1999): 377–391. Highwire 2.0. Web. 29 Jan. 2012.
